
| World Community Grid Forums


Thread Status: Active Total posts in this thread: 14


themoonscrescent

Veteran Cruncher UK Joined: Jul 1, 2006 Post Count: 1320 Status: Offline Project Badges:

|

I can't find the answer I need on this forum or, in fact, on Google.

I have installed an ATI HD 6570 2GB and have just started receiving GPU work units, but they are using 1 CPU for the entire length of the work unit (approx. 10 minutes). I have previously read that a GPU work unit will use 1 CPU for a short time at both the beginning and the end, but I haven't read that it takes 1 CPU away permanently. Is this normal, or should it give the CPU back? If it should, what's causing this and how do I fix it? i7-870 (8 WUs running). 8GB RAM. Win 7 Prof 64-bit. BOINC 7.0.28. HD 6570 2GB Turks. Cheers. |

||

|

|

pirogue

Veteran Cruncher USA Joined: Dec 8, 2008 Post Count: 685 Status: Offline Project Badges:

|

They use your GPU + 1 CPU per WU. What you're seeing is normal.

Most people who also run CPU WUs have changed their settings so that 1 CPU is left available for the GPU WUs. [Edit 1 times, last edit by pirogue at Oct 17, 2012 11:17:07 PM] |

||

|

|

themoonscrescent

Veteran Cruncher UK Joined: Jul 1, 2006 Post Count: 1320 Status: Offline Project Badges:

|

Cheers for the quick response; that puts my mind at rest. The last thing I wanted was a whole load of errors. |

||

|

|

pirogue

Veteran Cruncher USA Joined: Dec 8, 2008 Post Count: 685 Status: Offline Project Badges:

|

You're welcome.

|

||

|

|

Former Member

Cruncher Joined: May 22, 2018 Post Count: 0 Status: Offline |

I tried an app_info.xml file to allot less than a full core, and it just slowed everything down. Until the developers optimize the program to use less CPU, we are stuck with needing a full CPU core for each GPU.

|

||

|

|

Former Member

Cruncher Joined: May 22, 2018 Post Count: 0 Status: Offline |

"Until the developers optimize the program to use less CPU, we are stuck with needing a full CPU core for each GPU."

I think that some sub-routines are better suited to being processed by a CPU. The other side of this is that not all routines are suitable or ideal for processing by a GPU. Hence the need for a CPU thread in what would otherwise be work done exclusively by the GPU.

As far as reserving a CPU thread is concerned, I see BOINC as treating a GPU-WU's CPU part just like any other CPU thread. A cruncher need not pre-reserve a CPU thread for an HCC-GPU-WU. Here's what I observed happening: if an HCC-GPU-WU does not have a CPU thread, BOINC pauses one CPU-based WU to free its thread for the GPU's use. The paused CPU-based WU will soon get a thread back from the earliest CPU-based WU to complete. At this point, the reservation is complete: N-1 CPU threads are reserved for CPU-based WUs, while 1 thread is reserved for the CPU part of the HCC-GPU-WU. If any CPU-based WU is paused, another CPU-based WU will get its CPU thread; and if a GPU-based WU is paused, another GPU-based WU will get that CPU thread. [Edit 1 times, last edit by Former Member at Oct 21, 2012 6:51:22 PM] |
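The reservation pattern described above can be sketched as a toy model. This only illustrates the observed N-1 split and is not BOINC's actual scheduler; the function name `split_threads` and its parameters are hypothetical, and it assumes each running GPU work unit occupies exactly one CPU thread.

```python
def split_threads(total_threads, gpu_tasks_running):
    """Toy model: each running GPU work unit holds one CPU thread.

    Returns (threads_for_cpu_wus, threads_for_gpu_wus).
    Hypothetical helper; this is not how BOINC is implemented.
    """
    if total_threads < 0 or gpu_tasks_running < 0:
        raise ValueError("counts must be non-negative")
    # GPU work units cannot hold more threads than the machine has.
    gpu_threads = min(gpu_tasks_running, total_threads)
    cpu_threads = total_threads - gpu_threads
    return cpu_threads, gpu_threads


# An 8-thread CPU with one GPU work unit running:
# N-1 threads stay on CPU work, 1 thread feeds the GPU.
print(split_threads(8, 1))
```

With two GPU work units running at once, the same model would leave N-2 threads for CPU-based work.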

||

|

|

Former Member

Cruncher Joined: May 22, 2018 Post Count: 0 Status: Offline |

I've seen the same behaviour as you described, andzgrid.

My hope is that the code for HCC will evolve like that of some other projects, such as Collatz Conjecture, where you can run 2 or 3 units per CPU core because the app doesn't need to keep something running all the time that ties the core up 100%. This is the CPU setting portion of my Collatz app_info.xml file:

<app_version>
<app_name>collatz</app_name>
<version_num>203</version_num>
<plan_class>cuda</plan_class>
<avg_ncpus>0.013</avg_ncpus>
<max_ncpus>1</max_ncpus>
<flops>1.0e11</flops>
<coproc> |
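As a rough illustration of what an `avg_ncpus` of 0.013 implies, the arithmetic below adds up the CPU fraction budgeted for several concurrent GPU tasks. It assumes the budget simply sums across tasks, which is a simplification and not a statement about how BOINC schedules work; the helper name `cpu_budget` is hypothetical, and the 1.0 figure is the full core per GPU work unit reported for HCC in this thread.

```python
def cpu_budget(avg_ncpus, gpu_tasks):
    """CPU fraction budgeted for `gpu_tasks` concurrent GPU tasks.

    Hypothetical helper: assumes the per-task value just adds up.
    """
    if avg_ncpus < 0 or gpu_tasks < 0:
        raise ValueError("inputs must be non-negative")
    return avg_ncpus * gpu_tasks


# Three Collatz tasks at avg_ncpus = 0.013 each.
collatz_cores = cpu_budget(0.013, 3)

# Three GPU tasks that each need a full core, as reported for HCC here.
hcc_cores = cpu_budget(1.0, 3)

print(f"Collatz: {collatz_cores:.3f} cores, HCC: {hcc_cores:.3f} cores")
```

Under this assumption, three Collatz tasks together are budgeted a small fraction of one core, while three full-core tasks take three cores.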

||

|

|

mmstick

Senior Cruncher Joined: Aug 19, 2010 Post Count: 151 Status: Offline Project Badges:

|

Collatz Conjecture is really simple math that can easily be accelerated entirely on a primitive graphics card with minimal CPU interaction, while a project like this is much more complicated. I doubt there are many changes they could make to lessen the number of cycles required on the CPU end of things, but I'm interested in why you say one full core is used all the time. On my FX-8120 the cores are only fully utilized during the start and end points, i.e. the CPU phases; during the GPU phase there are minimal calculations happening (20% per core).

In short, you will need an HSA GPU with an HSA app before CPU load can be reduced. |

||

|

|

Former Member

Cruncher Joined: May 22, 2018 Post Count: 0 Status: Offline |

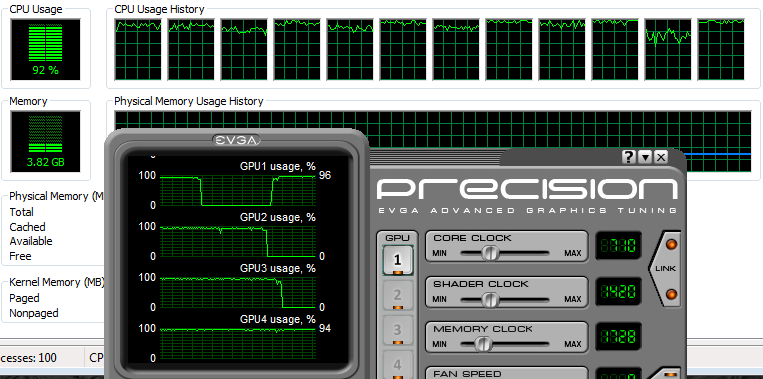

This image shows what I am talking about. I have an i7-980X and 2 GTX 590s with 12GB of memory. You can see that even though two of the GPUs are in full use, there is no drop in CPU usage. I have noticed that those talking about CPU usage dropping have both AMD CPUs and AMD GPUs, which leads me to believe that there is something very different between the two types of setup.

|

||

|

|

Former Member

Cruncher Joined: May 22, 2018 Post Count: 0 Status: Offline |

One thing I know for sure: with Hyper-Threading (Intel!), all unused cycles go towards other CPU tasks when the load on the physical cores exceeds 100%, meaning that if a GPU task is not using all of its CPU cycles, other tasks benefit. I tested this last week. AMD has no Hyper-Threading, so I suppose an idle thread is simply an idle thread, and that would be the difference.

EDIT: BTW, I think programs like Process Lasso can tell which BOINC task/process is using which core (the affinity). Tools like that can force multiple GPU tasks to share the same CPU threads, and can also force the full CPU tasks onto cores that you don't want the GPU tasks to run on. Otherwise, I think BOINC just assigns a task to the next available idle thread and is oblivious to outside hands forcing one or more tasks onto a single CPU core. Been there, done that: I had 3 HPF2 tasks running off 1 thread, a FAAH on a second thread, and 2 cores idle on the quad (the Q6600 has no HT). [Edit 1 times, last edit by Former Member at Oct 22, 2012 12:37:55 PM] |

||

|

|

|